#Careers

Artificial intelligence: Practical uses and ethical limits in science

ChatGPT, SciSpace, Anara: AI specialist Rebeca Scalco says tools need to work for scientists, not the other way around

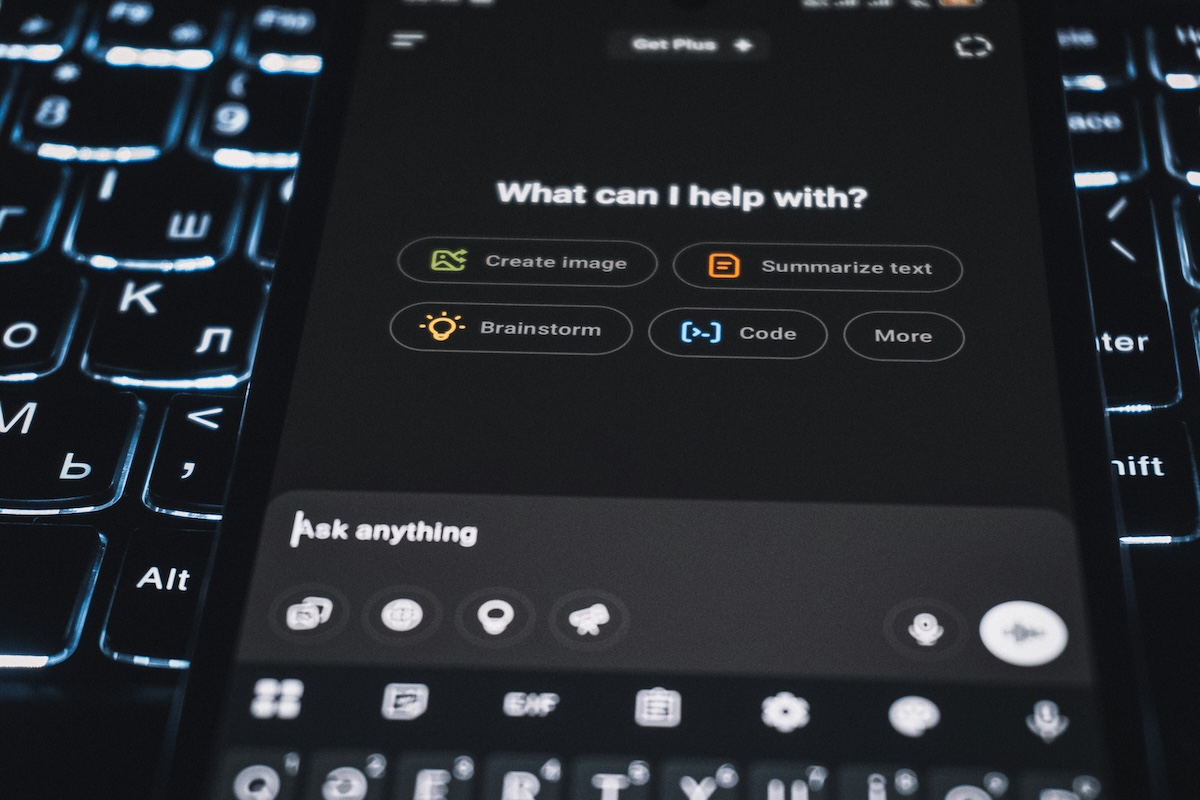

Generative AI tools like ChatGPT are used by scientists on a daily basis to help with tasks such as writing, programming, reviewing, and organizing ideas | Image: Zulfugar Karimov/Unsplash

Long before the rise of ChatGPT, artificial intelligence (AI) was already a reality—or at least a concern. In the 1950s, British mathematician Alan Turing (1912–1954), known for his decisive role in deciphering encrypted codes in the Second World War (1939–1945), was one of the first people to publicly ask if machines could think. The term “artificial intelligence,” however, was only coined in 1956 by Americans John McCarthy (1927–2011) and Marvin Minsky (1927–2016).

Since then, much has changed. Most importantly, machines have evolved from simply executing commands to learning from them, storing knowledge and even improving on it. Another major leap came with wider access to AI, which is now in the hands of the general public and part of everyday tasks.

“I see the democratization of AI as a powerful shift: the tool is now in the hands of the users for the first time,” says veterinarian Rebeca Scalco, who is pursuing a PhD in digital pathology and bioinformatics at the University of Bern, Switzerland.

Specializing in areas such as predictive modeling, bioinformatics applied to animal health, and new genetic sequencing technologies, Scalco uses machine learning and data analysis in her research.

More recently, she decided to share what she knows about AI in the form of products and services for scientists.

This includes two ebooks offering tips and solutions to researchers looking to make use of AI technologies and automate writing and data analysis processes. Scalco has also begun offering mentoring and courses on the subject.

With a bachelor’s degree and a master’s from the Federal University of Pelotas (UFPel) in Rio Grande do Sul, the veterinarian first encountered advanced AI tools while doing a research fellowship at the University of California.

The project was focused on human medicine, and her role at the neuropathology lab of the institution’s Alzheimer’s Disease Research Center was to monitor brain dissections and explain causes of death.

Encountering digital pathology

It was through this role that she encountered digital pathology, a technique in which histological slides containing tissue samples are digitized and examined as high-resolution images, eliminating the need for a microscope.

“With digitization, it is possible to apply algorithms without affecting the physical material,” explains Scalco. “Since pathology is essentially based on patterns, it is a perfect fit for machine learning.” At around the same time, ChatGPT emerged.

“I began incorporating generative AI into my academic routine, partly for research and partly as a practical tool for organizing, writing, and programming,” says Scalco.

How to use artificial intelligence ethically in your research

1. Declare that AI was used

As with statistical software, report which tools you used in the materials and methods section. Transparency is essential to maintaining scientific integrity.

2. Record the tools used in each stage

Use a simple spreadsheet to record the dates on which tools were used and the reasons for using them. This helps with organization, ensures accountability, and reduces the risk of misuse or accidental plagiarism.

3. Treat AI as an aid, not an author

Generative tools should be treated as interns: they can be very helpful, but the final work must undergo critical review and retain human authorship.

4. Be careful with sensitive data

Avoid entering unpublished, personal, or confidential data into proprietary platforms. Read the tool’s terms of use and privacy policy before sharing any sensitive content.

5. Know your institution’s guidelines

Universities such as UNICAMP and UFBA have established regulations for the ethical use of AI. Check whether your institution has its own protocols or recommendations.

6. Keep critical thinking as your compass

AI can speed up processes, but interpretation, analysis, and intellectual authorship remain human responsibilities. Never delegate these steps.
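For researchers who prefer a script to a hand-edited spreadsheet, the record-keeping in tip 2 could look something like the minimal Python sketch below. It is only an illustration, not a method described by Scalco: the column names, the `reviewed_by_human` flag, the file name `ai_usage_log.csv`, and the helper functions are all assumptions made for the example.

```python
import csv
from dataclasses import asdict, dataclass, fields
from datetime import date
from pathlib import Path


@dataclass
class AIUsageEntry:
    """One row of the log (hypothetical columns chosen for this sketch)."""
    used_on: str             # ISO date, e.g. "2025-01-15"
    stage: str               # research stage, e.g. "literature review"
    tool: str                # tool name, e.g. "ChatGPT"
    purpose: str             # why the tool was used
    reviewed_by_human: bool  # assumption: also track whether output was checked


def append_entry(log_path: Path, entry: AIUsageEntry) -> None:
    """Append one entry, writing the header row first if the file is new."""
    is_new = not log_path.exists()
    column_names = [f.name for f in fields(AIUsageEntry)]
    with log_path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=column_names)
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(entry))


def tools_by_stage(log_path: Path) -> dict[str, list[str]]:
    """Summarize the log as stage -> sorted tool names, e.g. for a methods section."""
    summary: dict[str, set[str]] = {}
    with log_path.open(newline="", encoding="utf-8") as handle:
        for row in csv.DictReader(handle):
            summary.setdefault(row["stage"], set()).add(row["tool"])
    return {stage: sorted(tools) for stage, tools in summary.items()}


if __name__ == "__main__":
    log = Path("ai_usage_log.csv")  # hypothetical file name
    append_entry(
        log,
        AIUsageEntry(date.today().isoformat(), "writing", "ChatGPT",
                     "English fluency check", True),
    )
    print(tools_by_stage(log))
```

The resulting CSV file opens in any spreadsheet program, so the log can still be reviewed or edited by hand, and the per-stage summary gives a starting point for the disclosure recommended in tip 1.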

Tools for every step of the process

Scalco uses several AI technologies in her academic routine. ChatGPT, she says, has become indispensable for structuring texts, reviewing clarity and fluency in English, generating code snippets (in Python and R), and preparing lesson plans and presentations.

For literature review, she uses SciSpace, which makes it easier to read complex articles, and Anara, which synthesizes information and generates explanations based on original sources.

She also uses ResearchRabbit and Inciteful to visualize citation networks and identify research trends.

“Sometimes, I use Google NotebookLM to organize notes and summaries. Each tool serves a different purpose, but ChatGPT, combined with a good literature support platform, is the basis of my day-to-day work,” she says.

Ethics and limits: AI as an assistant

The use of AI in academia is now commonplace for thousands of researchers. The topic, however, still divides opinion. While some welcome it with enthusiasm, others fear it trivializes the scientific process. Scalco recognizes both sides and advocates for a balanced position.

“These tools should be treated like good interns, eager to learn. But just as a doctoral student would never submit an intern’s unreviewed text for publication, they should not submit work done with AI without proper verification,” she warns.

Recent cases illustrate misuse:

- The journal Neurosurgical Review, published by Springer Nature, had to retract dozens of articles that had been generated by AI without this being disclosed.

- A book from the same publisher about machine learning was also withdrawn after the discovery of false references—so-called AI “hallucinations.”

The answer, Scalco says, is relatively simple:

“If you use AI, declare it in the materials and methods section, as we do with statistical software. I recommend using a spreadsheet to keep track of which tools you used at each stage.”

According to the researcher, some Brazilian universities, such as the University of Campinas (UNICAMP) and the Federal University of Bahia (UFBA), have made progress on this topic. “These institutions have published clear guidelines on the ethical use of AI in research and academic work.”

AI as a science accelerator

When used methodically, AI can be a powerful ally in research. Scalco highlights applications such as drafting texts, translating documents, screening articles, reviewing consistency, summarizing content, and generating initial insights.

“The tool SciSpace, for example, helps explain complex articles, but it is still the researcher who interprets and critically analyzes them,” she points out.

In fields such as bioinformatics, genomics, and big data, AI is practically indispensable.

Algorithm-based models identify genetic patterns, classify cells, predict molecular interactions, and accelerate discoveries with unprecedented precision.

“This would be impossible with classical statistics. AI does not replace researchers, but it does expand our ability to see ‘hidden’ relationships behind millions of data points,” she says.

Regulatory and geopolitical issues

Despite the progress, Scalco acknowledges that most AI platforms are controlled by large corporations with their own economic interests and limited regulation, especially in Brazil.

“When sharing sensitive data, we must ask ourselves: who is behind this technology? What are the risks? Is it really worth handing over years of research to a private company without any guarantees?” she asks.

The European Union has taken the lead with the 2024 AI Act, which establishes risk categories and sets requirements for transparency and human oversight.

The United Nations Educational, Scientific, and Cultural Organization (UNESCO) has issued a global recommendation on the ethical use of AI, adopted by almost 200 countries.

In Brazil, Bill 2338/2023 (known as the AI Bill), which is currently being debated in the Senate, addresses governance, civil liability, and fundamental rights.

“These rules are not neutral. They are the result of disputes and lobbies,” says Scalco.

“That is why academia and civil society need to be involved in regulation, ensuring that the public interest remains central, especially in fields like health,” she argues.

For Scalco, the message is clear: AI works for the researcher and not the other way around. “People are delegating too much. AI is powerful, but it must not nullify us. Never give up on your critical thinking, your perspective, and your power to reflect,” she urges.

*

This article may be republished online under the CC-BY-NC-ND Creative Commons license.

The text must not be edited and the author(s) and source (Science Arena) must be credited.